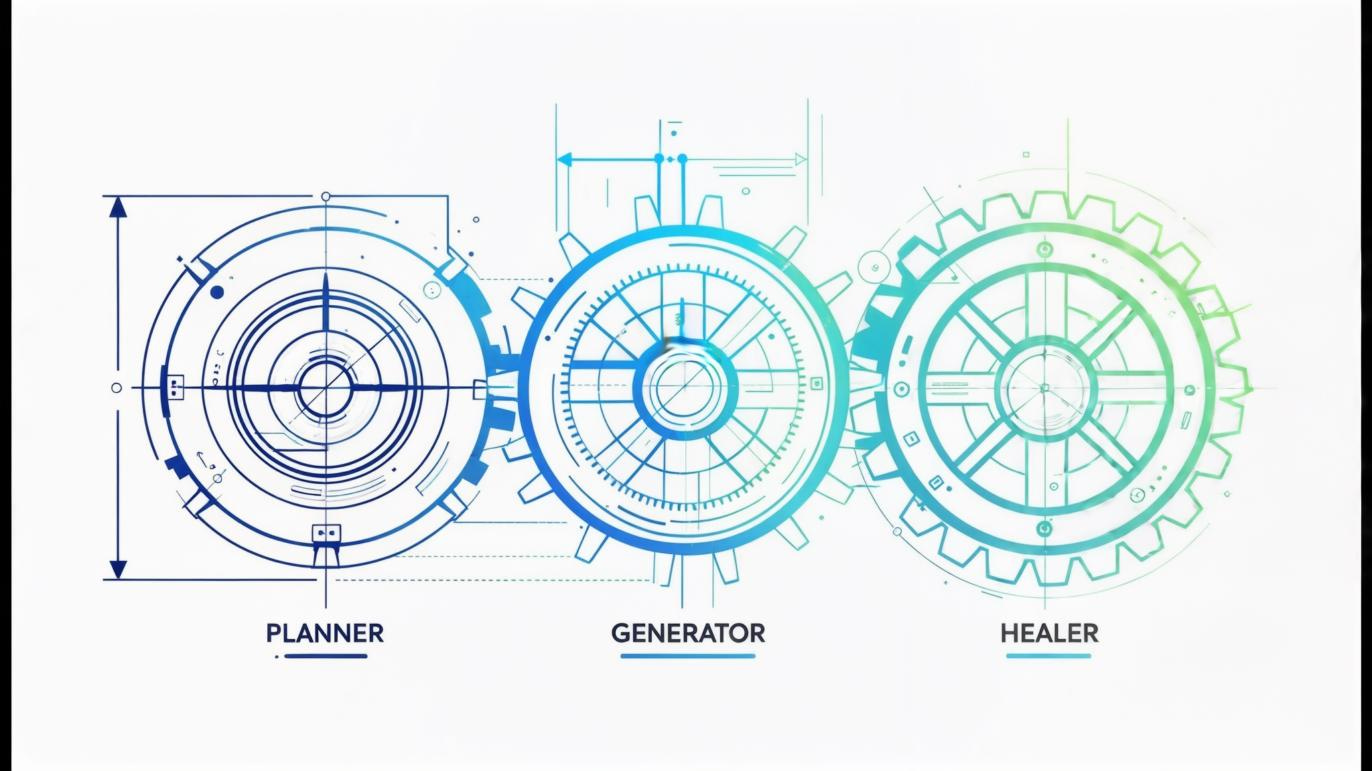

Playwright just released Planner, Generator and Healer agents. Here's what that means for your test stack

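Playwright just shipped three capabilities it calls Playwright Test Agents: Planner (explores your app and writes a Markdown test plan), Generator (turns that plan into Playwright test files, checking selectors and assertions against the live app), and Healer (runs the suite and tries to repair failing tests, for example by updating locators or waits). All three ship with Playwright itself: you add the agent definitions to a project with `npx playwright init-agents` and drive them from an AI assistant such as VS Code Copilot or Claude Code. You need that assistant, but no separate testing platform subscription. No plugins to wire together.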

This matters because most test frameworks still require you to compose these capabilities from different vendors. Selenium teams add Launchable for planning and Healenium for healing. Cypress teams subscribe to Cypress Cloud for orchestration. WebdriverIO teams patch together plugins. Platform vendors like mabl and testRigor bundle everything but charge accordingly.

Playwright just pulled capabilities from three vendor categories into the framework itself. If you’re evaluating tools or defending your current stack, the comparison just shifted.

The timing is critical. AI can now generate test suites in hours instead of weeks. That speed creates a new problem: flaky tests at scale. When you can create hundreds of tests in a day, you need industrial-strength healing and planning, or you’ll drown in false failures. Agent capabilities promise less maintenance overhead, but the trade-off is new complexity: healing logic that needs auditing, planning algorithms that need validation, and generated tests that can inherit flakiness at scale. The question is where they live in your stack, what they cost, and whether your team can troubleshoot them when they break.

What Planner, Generator and Healer actually do

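Planner explores your application and writes a Markdown test plan for the scenarios you ask about, guided by a seed test that sets up the environment and, optionally, a product requirements document. It does not decide which tests run in CI. For that, Playwright’s runner offers `--only-changed` (run the test files affected by changes since a git ref), `--last-failed` and sharding. Dedicated tools like Launchable and Harness go further, weighing historical failures and flakiness to pick a subset, but they require separate integration and subscription. Done well, test selection can significantly reduce CI time without losing signal.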
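Change-based selection is easier to reason about once you see it in a few lines. The sketch below selects a test file if it, or anything it imports directly or transitively, appears in the changed-file list. That is conceptually similar to what Playwright’s `--only-changed` does with a git diff; the import-graph shape and function names are hypothetical, and commercial tools layer historical and risk signals on top.

```typescript
// Hypothetical import graph: each file maps to the files it imports directly.
type ImportGraph = Record<string, string[]>;

// True if `file`, or anything it imports directly or transitively, is in `changed`.
function dependsOnChange(
  file: string,
  graph: ImportGraph,
  changed: Set<string>,
  seen: Set<string> = new Set<string>(),
): boolean {
  if (changed.has(file)) return true;
  if (seen.has(file)) return false; // already explored; also guards against import cycles
  seen.add(file);
  return (graph[file] ?? []).some((dep) => dependsOnChange(dep, graph, changed, seen));
}

// Keep only the test files whose dependency closure touches a changed file.
function selectAffectedTests(
  testFiles: string[],
  graph: ImportGraph,
  changedFiles: string[],
): string[] {
  const changed = new Set(changedFiles);
  return testFiles.filter((t) => dependsOnChange(t, graph, changed));
}

// Usage: a change to the cart component only affects the checkout spec.
const graph: ImportGraph = {
  "tests/checkout.spec.ts": ["src/pages/checkout.ts"],
  "tests/login.spec.ts": ["src/pages/login.ts"],
  "src/pages/checkout.ts": ["src/components/cart.ts"],
};
console.log(
  selectAffectedTests(["tests/checkout.spec.ts", "tests/login.spec.ts"], graph, ["src/components/cart.ts"]),
); // selects only tests/checkout.spec.ts
```

A plain visited set keeps this a standard reachability check, so cyclic imports cannot loop forever.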

Generator turns a plan, a recording or a prompt into test code. The Generator agent works from the Planner’s Markdown plan and checks selectors and assertions against the live app as it goes, while Codegen (`npx playwright codegen`) still records tests from your own clicks. The upside: it won’t write perfect assertions, but it gets you most of the way to a working test in a fraction of the time. The catch: AI-assisted generation is now fast enough to be dangerous. It can produce hundreds of tests before you notice they’re brittle.

Healer runs your tests and, when one fails, replays the failing steps, inspects the current UI for an equivalent element or flow, and proposes a patch such as a locator update, a wait adjustment or a data fix. It then reruns the test until it passes or its guardrails stop the loop. That keeps tests green through trivial selector churn. The catch: when healing fails or makes the wrong guess, someone needs to debug why. If your team can read code and use browser DevTools, this is manageable. If you’re fully no-code, you’re dependent on vendor support to explain what broke.
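Healing strategies differ by tool; Playwright’s Healer, for instance, has an AI agent replay the failure and propose a change to the test code. A much simpler pattern, shown here only to make the audit problem concrete, is to try an ordered list of candidate locators and log whenever a non-primary one matched. The `matches` callback is a hypothetical stand-in for a real DOM query, and this is not how Playwright, Healenium or any platform is implemented.

```typescript
// One automatic substitution: the primary locator failed and a fallback matched.
interface HealEvent {
  testId: string;
  original: string;
  healedTo: string;
}

// Try candidate locators in priority order. `matches` is a hypothetical stand-in
// for a real DOM query (for example, "does exactly one element match?").
function resolveLocator(
  testId: string,
  candidates: string[],
  matches: (locator: string) => boolean,
  auditLog: HealEvent[],
): string | undefined {
  const found = candidates.find((c) => matches(c));
  if (found !== undefined && found !== candidates[0]) {
    // Log every heal so a human can review it later.
    auditLog.push({ testId, original: candidates[0], healedTo: found });
  }
  return found; // undefined means nothing matched: fail the test rather than guess
}

// Usage: the old id was removed, but the accessible button is still there.
const log: HealEvent[] = [];
const liveDom = new Set(["role=button[name='Place order']"]);
const used = resolveLocator(
  "checkout-happy-path",
  ["#submit-btn", "role=button[name='Place order']"],
  (locator) => liveDom.has(locator),
  log,
);
console.log(used, log.length); // role=button[name='Place order'] 1
```

The important design choice is the audit log: a heal that silently changes what a test clicks is exactly the kind of wrong guess someone later has to debug.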

The critical difference between tools isn’t just whether these capabilities exist. It’s where they live (core runner, cloud platform, or plugin), and whether your team can see inside the logic when something breaks.

Code-first runners: you can see what breaks

Playwright now ships all three agents alongside the core runner. Test generation works through Codegen and the Generator agent. The Planner agent drafts test plans, while the runner itself handles test selection and distribution with `--only-changed`, `--last-failed` and built-in sharding. The Healer agent patches failing tests, and because its fixes land as changes to your test code, you can review them like any other diff. When healing makes a mistake, you have complete traces, source code and DOM snapshots. Everything is transparent. If you’re starting fresh or can migrate, this is the path of least friction.

Selenium is protocol-pure and deliberately minimal. Planning comes from Launchable or Harness (separate subscription). Generation comes from IDE recorders, browser extensions or LLM prompts. Healing comes from Healenium (open source) or vendor SDKs. This composability is valuable when you have polyglot teams or deep WebDriver investment. The tradeoff: you’re managing multiple integrations. The upside: when something breaks, you can debug it. Selenium’s transparency means you’re never locked into vendor diagnostics. For established enterprises with significant WebDriver investment, this flexibility justifies the composition overhead.

Cypress splits the model. The open-source runner is lean. Planning and analytics live in Cypress Cloud, which also provides orchestration and insights. Generator capabilities are limited (basic recorder imports). Healing isn’t a standard feature. You get detailed logs and stack traces for failures. If you already use Cypress, the cloud subscription makes sense for the orchestration value. If you’re evaluating fresh, compare the total cost (runner + cloud + device grid) to Playwright’s bundled approach.

WebdriverIO has a rich plugin ecosystem and nothing agent-native. You compose planning, generation and healing the same way you would with Selenium. Good fit for Node specialists who want flexibility and don’t mind wiring things together. Debugging is transparent because everything is code you control. The composition overhead is similar to Selenium but stays within the JavaScript ecosystem.

Appium is mobile-first. Planning typically happens in CI or via plugins. Generation comes from Appium Inspector or third-party recorders. Healing comes from AI locator plugins or device lab features. Mobile adds complexity: device fragmentation, OS updates, app store builds. When healing fails, you need engineers who understand mobile automation internals. If you’re doing native mobile at scale, Appium plus a real device lab is still the standard. Playwright’s agents don’t change this calculus for native mobile.

Low-code and AI platforms: fast until they’re not

mabl, testRigor, Testim, Functionize, Virtuoso and Autify bundle planning, generation and healing behind a subscription. You trade higher cost for less maintenance overhead. These platforms had a clear value proposition before Playwright’s agent release: they handled complexity you’d otherwise build yourself. That value proposition just narrowed. They still win on governance, non-technical team access, and enterprise support. But the technical gap has shrunk significantly.

The risk remains: when healing breaks or generates a flaky test, you’re reading vendor logs, not source code. Most platforms provide good diagnostics for common failures (element not found, timeout, stale reference). When you hit an edge case or a framework bug, you’re waiting on vendor support. If your team has no coding background, this is acceptable. If you have engineers, the lack of transparency can be frustrating.

Tricentis Tosca with Vision AI uses model-based and vision-driven automation. Strong fit for complex, DOM-hostile applications where traditional locators fail. Planning and healing are built into the platform, and you get enterprise governance controls by default. Tosca’s model layer abstracts the technical details, which is the point. You’re not debugging selectors; you’re adjusting test models. This works well in regulated industries where governance matters more than speed. Playwright’s agents don’t compete here because Tosca solves a different problem (governance and model-based stability in regulated environments).

The flakiness problem with fast generation

AI can generate dozens of tests from a Figma prototype or a screen recording in an afternoon. Playwright’s Generator makes this even faster. Most will run green the first time. Within days, a noticeable portion become flaky. Within weeks, teams start losing trust in the suite.

The problem isn’t the generation capability. It’s that generated tests optimise for coverage, not stability. They use the first available selector. They don’t add proper waits. They don’t verify state before acting. When you generate at speed, you inherit flakiness at speed. This is true whether you’re using Playwright’s Generator, an LLM, or a platform recorder.
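One cheap guard is a review-time check that flags the locator patterns generated tests tend to grab first. The heuristics below (positional `nth-child`, XPath, long CSS chains, hashed class names) are illustrative assumptions, not an official Playwright rule set; Playwright’s own guidance favours user-facing locators such as `getByRole` and test ids.

```typescript
interface SelectorWarning {
  selector: string;
  reason: string;
}

// Illustrative heuristics for brittle selectors; tune them for your own codebase.
const RULES: Array<{ test: (s: string) => boolean; reason: string }> = [
  { test: (s) => /:nth-(child|of-type)\(/.test(s), reason: "positional selector" },
  { test: (s) => s.startsWith("//") || s.startsWith("xpath="), reason: "XPath" },
  // More than three compound selectors separated by spaces or ">" couples the test to layout.
  { test: (s) => s.split(/\s*>\s*|\s+/).filter(Boolean).length > 3, reason: "long CSS chain" },
  // Build-generated class names such as ".btn-3f9a2c" change on every release.
  { test: (s) => /\.[a-zA-Z]+-[a-f0-9]{5,}\b/.test(s), reason: "hashed class name" },
];

function lintSelectors(selectors: string[]): SelectorWarning[] {
  const warnings: SelectorWarning[] = [];
  for (const selector of selectors) {
    for (const rule of RULES) {
      if (rule.test(selector)) warnings.push({ selector, reason: rule.reason });
    }
  }
  return warnings;
}

// Usage: flags only the first selector, as positional.
console.log(lintSelectors(["#main > div:nth-child(2) > span", "[data-testid=submit]"]));
```

Running a check like this on every generated test before review turns "they use the first available selector" into a concrete list of lines to fix.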

Code-first teams can fix this. You open the test file, check the Playwright trace, switch to a more resilient locator (role, label or test id), and replace fixed timeouts with web-first assertions such as `expect(locator).toBeVisible()`, which retry until the expected state appears. It takes minutes per test, but a substantial portion of generated tests will need this attention, which adds up to significant cleanup work across a large generated suite. Playwright’s Healer reduces some of this, but not all. You still need human review for brittle assertions and flaky waits.

No-code teams can’t easily fix this. You’re clicking through the platform UI, adjusting recorder settings, hoping the next regeneration is more stable. Some platforms (mabl, testRigor) have good auto-stabilisation. Others require support tickets. The share of generated tests that need attention doesn’t shrink on a platform; the fixes just become configuration changes or support engagements instead of code edits. If you’re generating large numbers of tests with AI assistance, make sure your platform has industrial-strength healing and clear diagnostics, or budget for vendor support hours.

Quick picks by situation

Greenfield web application, code-first team: Playwright. All three agents are built in. Add visual testing and a device cloud if you need cross-browser or device coverage. When tests break, you can debug them with full traces. This is now the default recommendation unless you have specific constraints.

Established Selenium investment, polyglot team: Keep your framework. Add Launchable or Harness for planning, Healenium for healing, and a visual tool for layout checks. This composability lets you modernise without rewriting. You retain full debugging control. The composition overhead is justified by your existing investment and team skills.

JavaScript team with existing Cypress usage: Cypress runner plus Cypress Cloud. The platform handles orchestration and insights. Layer a device grid and visual testing as needed. Good balance of managed service and code access. If you’re evaluating fresh, compare total cost to Playwright, but if you’re already on Cypress the migration cost may not justify switching.

Node specialists who like plugins: WebdriverIO with Launchable and Healenium. Familiar, flexible, and you control the integration points. Best for teams comfortable with debugging at the framework level. Playwright is simpler, but if your team values plugin flexibility over batteries-included, WebdriverIO still fits.

Native mobile at scale: Appium 2.x with a real device lab. Add AI locator plugins for healing. Consider Maestro for simple cross-platform flows. Mobile requires strong technical debugging skills regardless of tooling. Playwright’s agents don’t apply here.

Low oversight, SaaS-style applications, non-technical team: mabl or testRigor. Minimal engineering input, strong visual and healing capabilities. Accept that complex debugging goes through vendor support. Playwright’s agents don’t help if your team can’t work with code-level traces.

Complex legacy UIs or regulated environments: Tricentis Tosca with Vision AI. Model-driven stability and governance are built in. You’re paying for abstraction and enterprise support, which is the right tradeoff in regulated industries. Playwright competes on technical capabilities but not on governance depth.

Broad autonomy with ML depth, technical team available: Functionize or Virtuoso. Higher subscription, but they handle more of the decision-making. Make sure you have at least one engineer who can interpret platform diagnostics when things go wrong. The value gap versus Playwright narrowed, so re-evaluate whether the platform overhead is justified.

Cost and return

Code-first open-source tools cost nothing for licences. Playwright’s agent definitions are free too, although you pay for whichever AI assistant or model runs them. You also pay for device grids, visual testing subscriptions, and CI minutes. Planning and healing plugins for Selenium or WebdriverIO may add subscription fees (Launchable typically ranges from hundreds to low thousands per month depending on scale), but the total is usually lower than a full platform. Budget engineering time for debugging and maintenance. If you have that capacity, this is the most flexible and now the most capable option.

SaaS platforms cost more upfront. Pricing varies by seats, executions and retention. mabl and testRigor typically range from mid to high thousands per month for small to mid-sized teams. Enterprise platforms (Tosca, Katalon) run significantly higher. The return comes from reduced maintenance hours. If a platform cuts flaky failures substantially and saves meaningful engineer-hours per week, it can pay for itself within months. The hidden cost: when something breaks outside the platform’s common failure patterns, you’re waiting on vendor support instead of fixing it yourself.
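The payback claim is simple arithmetic. A minimal sketch, where every input (subscription price, one-off setup cost, hours saved, loaded hourly rate) is a placeholder you would replace with your own figures:

```typescript
interface PaybackInputs {
  monthlySubscription: number; // platform cost per month
  setupCost: number;           // one-off migration and onboarding cost
  hoursSavedPerWeek: number;   // engineer-hours no longer spent on maintenance
  hourlyRate: number;          // loaded cost of one engineer-hour
}

// Months until net savings cover the setup cost; Infinity if they never do.
function paybackMonths(i: PaybackInputs): number {
  const weeksPerMonth = 52 / 12;
  const monthlySavings = i.hoursSavedPerWeek * weeksPerMonth * i.hourlyRate;
  const netPerMonth = monthlySavings - i.monthlySubscription;
  if (netPerMonth <= 0) return Infinity; // the subscription costs more than it saves
  return Math.ceil(i.setupCost / netPerMonth);
}

// Usage with placeholder numbers: 20 hours/week saved at 100/hour against a 4,000/month plan.
console.log(paybackMonths({ monthlySubscription: 4000, setupCost: 10000, hoursSavedPerWeek: 20, hourlyRate: 100 })); // 3
```

The `netPerMonth <= 0` branch is the case worth checking first: if hours saved are modest, no setup discount makes the platform pay for itself.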

Playwright’s agent release changes the cost calculation. Previously, composing planning and healing yourself meant significant engineering overhead. Now it’s bundled. If you were evaluating platforms primarily for technical capabilities (not governance or non-technical users), the cost gap just widened in favour of Playwright.

The biggest hidden cost is still flaky tests. Every false failure wastes time investigating, reruns burn CI minutes, and teams lose trust in the suite. AI-generated tests multiply this problem regardless of tool. A flaky test costs hours of investigation time. At scale, this adds up to substantial engineering cost that most teams dramatically underestimate.
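To see how it adds up, here is a back-of-the-envelope estimator. All inputs (flaky failures per week, hours per investigation, rerun minutes, CI price per minute, hourly rate) are placeholders, not measurements from any team:

```typescript
interface FlakeCostInputs {
  flakyFailuresPerWeek: number;
  hoursPerInvestigation: number;
  rerunMinutesPerFailure: number;
  ciCostPerMinute: number;
  engineerHourlyRate: number;
}

// Weekly cost = engineer time spent investigating + CI minutes burned on reruns.
function weeklyFlakeCost(i: FlakeCostInputs): { investigation: number; ci: number; total: number } {
  const investigation = i.flakyFailuresPerWeek * i.hoursPerInvestigation * i.engineerHourlyRate;
  const ci = i.flakyFailuresPerWeek * i.rerunMinutesPerFailure * i.ciCostPerMinute;
  return { investigation, ci, total: investigation + ci };
}

// Usage with placeholder numbers: ten flaky failures a week, one hour each.
console.log(
  weeklyFlakeCost({
    flakyFailuresPerWeek: 10,
    hoursPerInvestigation: 1,
    rerunMinutesPerFailure: 20,
    ciCostPerMinute: 0.25,
    engineerHourlyRate: 100,
  }),
); // { investigation: 1000, ci: 50, total: 1050 }
```

Even with generous CI pricing, the investigation term usually dominates, which is why stability is worth more than cheaper infrastructure.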

Optimise for stability before optimising for infrastructure spend. A stable suite with higher infrastructure costs is cheaper than a flaky suite with low infrastructure costs. Playwright’s Healer helps, but it’s not magic. You still need good locator strategies and proper waits.

Governance and controls

Healing is powerful but risky if unaudited. Log every automatic selector change, whether from Playwright’s Healer, Healenium, or a platform tool. Require approvals in regulated environments. Set opt-out flags so teams can disable healing on critical flows until they review the changes.
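These rules can be encoded as a small policy check that runs before any heal is applied. A minimal sketch, assuming heal events are already captured as records; the record fields, the `criticalFlows` opt-out list and the `regulated` switch are hypothetical, not settings of Playwright, Healenium or any platform:

```typescript
type HealDecision = "auto-apply" | "needs-approval" | "blocked";

interface HealRecord {
  testId: string;
  flow: string;       // business flow the test covers, e.g. "checkout"
  oldLocator: string;
  newLocator: string;
  timestamp: string;  // ISO 8601
}

interface HealPolicy {
  regulated: boolean;          // require human approval for every heal
  criticalFlows: Set<string>;  // flows where healing is opted out until reviewed
}

function decideHeal(record: HealRecord, policy: HealPolicy): HealDecision {
  if (policy.criticalFlows.has(record.flow)) return "blocked";
  if (policy.regulated) return "needs-approval";
  return "auto-apply";
}

// Append-only audit trail: every heal is logged with its decision, whatever the outcome.
function auditHeal(record: HealRecord, policy: HealPolicy, log: string[]): HealDecision {
  const decision = decideHeal(record, policy);
  log.push(JSON.stringify({ ...record, decision }));
  return decision;
}
```

Logging blocked heals as well as applied ones matters: a spike in blocked heals on a critical flow is usually the earliest sign that the UI changed under the suite.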

AI-generated tests need stricter governance. Tag every generated test in your codebase or platform. Gate them through pull request review or manual approval. Run them in isolation for the first week. Measure their flake rate separately. Only promote stable generated tests to the main suite. This applies whether you’re using Playwright’s Generator, an LLM, or a platform recorder.
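The promotion rule is easier to enforce once it is written down. This sketch assumes each isolated run is recorded as passed, failed or flaky (Playwright itself reports a test that fails and then passes on retry as flaky); the seven-day window follows the isolation period above, while the minimum run count and 2% threshold are illustrative values to tune:

```typescript
type Outcome = "passed" | "failed" | "flaky";

interface GeneratedTestHistory {
  testId: string;
  firstRunDate: Date;
  outcomes: Outcome[]; // one entry per CI run while in isolation
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Promote only tests that ran in isolation long enough, often enough, and stably enough.
function shouldPromote(
  h: GeneratedTestHistory,
  now: Date,
  minDays = 7,
  minRuns = 20,
  maxUnstableRate = 0.02,
): boolean {
  const daysInIsolation = (now.getTime() - h.firstRunDate.getTime()) / MS_PER_DAY;
  if (daysInIsolation < minDays || h.outcomes.length < minRuns) return false;
  // Hard failures also count against promotion: a human should decide
  // whether the test or the app is wrong before it joins the main suite.
  const unstable = h.outcomes.filter((o) => o !== "passed").length;
  return unstable / h.outcomes.length <= maxUnstableRate;
}
```

Keeping the thresholds as parameters makes it easy to publish them alongside the weekly dashboard, so teams know exactly what "stable" means.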

Planning needs transparency. Show why a test was included or skipped (changed files, historical failure rate, risk score). Playwright’s `--only-changed` flag makes one rule explicit: run the test files affected by a git diff. It doesn’t weigh history or risk, though. Launchable and Harness expose richer signals behind their picks. Track incidents where a skipped test would have caught a bug. Publish this data weekly so teams trust the selection logic.
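Transparency mostly means recording a reason next to every include or skip decision. A sketch, assuming you already know which tests touch changed files (for example from a dependency walk or Playwright’s `--only-changed`) and have a recent failure rate per test; the `alwaysRun` set, the 10% threshold and the record shape are hypothetical:

```typescript
interface SelectionDecision {
  testId: string;
  included: boolean;
  reasons: string[];
}

function explainSelection(
  testIds: string[],
  affectedByChange: Set<string>,
  failureRate: Map<string, number>, // share of recent runs that failed, 0..1
  alwaysRun: Set<string>,           // e.g. smoke tests that run on every commit
  riskThreshold = 0.1,
): SelectionDecision[] {
  return testIds.map((testId) => {
    const reasons: string[] = [];
    if (alwaysRun.has(testId)) reasons.push("in always-run set");
    if (affectedByChange.has(testId)) reasons.push("touches changed files");
    const rate = failureRate.get(testId) ?? 0;
    if (rate >= riskThreshold) reasons.push(`recent failure rate ${(rate * 100).toFixed(0)}%`);
    const included = reasons.length > 0;
    // Skips get an explicit reason too, so a missed defect can be traced to a rule.
    if (!included) reasons.push("skipped: unaffected by change, low recent failure rate");
    return { testId, included, reasons };
  });
}
```

Publishing these records, rather than just the selected list, is what lets a team answer "why didn't this test run?" after an escaped bug.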

Keep observability high. Store traces, videos and logs. Playwright’s trace viewer is excellent for this. Trend flake rate, median time to investigate, and rerun percentage. Add a metric for “vendor support tickets opened due to unexplained test failures” if you’re on a platform. If that number trends up, you’re hitting the limits of the platform’s diagnostics.

Adoption plan: first 90 days

Month one: Baseline your current reliability. Measure flake rate and median time to investigate failures. If evaluating Playwright, run a parallel pilot on one service. Turn on tracing, videos and parallelism. Test the Generator on a few flows. If using AI generation (Playwright or otherwise), run generated tests in isolation and measure their flake rate separately.
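For the pilot, the relevant switches are standard `playwright.config.ts` options. The sketch assumes a convention of tagging AI-generated tests with `@generated` (for example `test('guest checkout', { tag: '@generated' }, async ({ page }) => { /* ... */ })`) and running them as their own project; the tag name and the two-project layout are illustrative choices, not something Playwright sets up for you.

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  fullyParallel: true,             // run tests inside each file in parallel too
  retries: process.env.CI ? 2 : 0, // with retries, pass-on-retry tests are reported as flaky
  reporter: [['html'], ['json', { outputFile: 'results.json' }]],
  use: {
    trace: 'on-first-retry',       // capture a full trace when a test is retried
    video: 'retain-on-failure',
    screenshot: 'only-on-failure',
  },
  projects: [
    // Main suite: everything except tests tagged as AI-generated.
    { name: 'main', grepInvert: /@generated/ },
    // Isolated lane for generated tests, so their flake rate is measured separately.
    { name: 'generated', grep: /@generated/ },
  ],
});
```

Run the isolated lane on its own with `npx playwright test --project=generated`; the JSON report then gives you a separate flake count for generated tests.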

Month two: Enable change-based test selection with Playwright’s `--only-changed` flag, or add Launchable/Harness if staying on Selenium. Introduce healing (Playwright’s Healer agent or Healenium) with audit logs and opt-out flags. Add visual checks on critical user journeys. Review all healed selectors weekly. If you’re on a no-code platform, run a fire drill: pick a complex failure and see how long it takes to get a clear diagnostic.

Month three: Enforce approval gates for automatic heals. Publish weekly dashboards showing planning coverage and any missed defects. Remove dead tests. Focus the suite on high-signal scenarios. If using AI generation at scale, establish a promotion process: generated tests run in isolation for a defined period, require low flake rates, then promote to main suite.

Metrics to track

Flake rate: Target low single digits on stable suites. AI-generated tests will start higher. Track them separately.

Median time to investigate with traces: Target under 15 minutes for code-first tools with good tracing (Playwright, Cypress). No-code platforms will be higher (typically 30 to 60 minutes) because investigation happens through vendor UI or support.

False positives from healing: Target very low rates. If Playwright’s Healer or Healenium is guessing wrong frequently, you need better locator strategies or tighter healing rules.

CI time saved via planning: Monitor reduction in run time. Change-based selection (Playwright’s `--only-changed`) or tools like Launchable should show measurable savings quickly.

Vendor support tickets for unexplained failures: Track this if you’re on a platform. If it’s growing, you’re outgrowing the platform’s diagnostic capabilities.
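Most of these metrics fall out of per-run records your CI already produces. A sketch over a hypothetical record shape (outcome, minutes spent investigating, whether a heal was applied and later reverted); map it to whatever your reporter or platform exports:

```typescript
interface RunRecord {
  testId: string;
  outcome: "passed" | "failed" | "flaky";
  investigateMinutes?: number; // set when someone triaged this result
  healApplied?: boolean;
  healReverted?: boolean;      // a reviewer judged the heal wrong
}

function median(xs: number[]): number | undefined {
  if (xs.length === 0) return undefined;
  const s = [...xs].sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 === 1 ? s[mid] : (s[mid - 1] + s[mid]) / 2;
}

function suiteMetrics(runs: RunRecord[]) {
  const flaky = runs.filter((r) => r.outcome === "flaky").length;
  const heals = runs.filter((r) => r.healApplied === true);
  return {
    flakeRate: runs.length > 0 ? flaky / runs.length : 0,
    medianInvestigateMinutes: median(
      runs.flatMap((r) => (r.investigateMinutes !== undefined ? [r.investigateMinutes] : [])),
    ),
    // Share of applied heals that a reviewer later reverted.
    healFalsePositiveRate:
      heals.length > 0 ? heals.filter((r) => r.healReverted === true).length / heals.length : 0,
  };
}
```

Computing the same numbers separately for tagged generated tests gives you the split view the flake-rate target calls for.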

Common questions

Should I migrate from Selenium or Cypress to Playwright just for the agents? Only if you’re already dissatisfied with your current setup or starting a new project. Migration has real cost. If Selenium plus Launchable and Healenium works well, the composition overhead may be acceptable. If you’re evaluating fresh, Playwright is now the simpler choice.

Are AI agents ready to generate full test suites unsupervised? No. Playwright’s Generator, LLMs, and platform recorders are all excellent for scaffolding, ideation and generating happy-path flows. They’re terrible at edge cases, error handling and stable locator strategies. Expect a meaningful portion of generated tests to need manual review and stabilisation. Keep technical reviewers in the loop.

Do I still need visual testing if I have Healer? Yes. Healing finds elements. Visual checks catch layout, colour and rendering regressions that DOM assertions miss. Use both.

Can I mix tools? Absolutely. Many teams run Playwright with visual testing and a device cloud, or Selenium with Healenium, Launchable, and visual checks. Compose what works for your context.

What if my team has no coding background and we’re generating tests fast with AI? Pick a platform with very strong healing and diagnostics (mabl, testRigor). Slow down generation until you’ve proven the platform can stabilise tests without engineering intervention. Budget for vendor support hours. Accept that debugging complex issues will take longer. Playwright’s agents won’t help if your team can’t work with code-level traces.

What if we have engineers but they’re new to test automation? Start with Playwright. The learning curve is gentler than Selenium, traces are excellent, healing and planning are built in, and the documentation is strong. Avoid composing multiple plugins until the team is comfortable debugging at the framework level.

What this means for your stack

Playwright’s Planner, Generator and Healer agents just reset the baseline for code-first test automation. If you want fewer vendors, transparent debugging, and no platform subscription, it’s the new default. The technical gap between open-source code-first tools and paid platforms just narrowed significantly.

Platforms still win on governance depth, non-technical user access, and enterprise support. If your team has limited coding background and you’re generating tests with AI assistance, pick a platform with strong healing and excellent diagnostics. Understand that when you hit edge cases, you’re dependent on vendor support. That’s acceptable if the platform covers most failures well. It’s not acceptable if you’re filing support tickets regularly.

If you’re on Selenium with a working plugin stack, the migration cost may not justify switching immediately. But if you’re evaluating fresh or starting a new project, Playwright’s bundled agents remove most reasons to compose tools yourself.

The right answer depends on your team’s technical depth, governance requirements, how fast you’re generating tests, and how much flakiness you can tolerate. Most teams underestimate the cost of flaky tests and overestimate the cost of good tooling. They also underestimate how fast AI generation creates flakiness debt regardless of framework.

A stable suite is worth more than a large suite. Prioritise frameworks and platforms that let you see inside the failure, whether that’s code-level traces (Playwright, Selenium, Cypress) or excellent platform diagnostics (mabl, testRigor).

What’s your current test stack? Are you evaluating Playwright’s agents, or sticking with your existing composition? Drop a comment with your setup and biggest maintenance challenge.

By Gavin Cheung